In multicriteria (multiobjective) optimization, several objective functions have to be minimized simultaneously. Usually, no single point will minimize all given objective functions at once, and so the concept of optimality has to be replaced by the concept of Pareto-optimality or efficiency. A point is called Pareto-optimal or efficient, if there does not exist a different point with the same or smaller objective function values, such that there is a decrease in at least one objective function value.

Two different efficient points will usually be not only quite different from each other in terms of objective function values, but also incomparable with each other: it will not be the case that one point is better than the other one with respect to all objective functions involved. Instead, the quality of the points will change across different objectives. Therefore, we have to gain as much information as possible about the solution set of a given problem, preferably by constructing a well-defined approximation to it.

The figure below illustrates the solution concept as well as the main problem: a set of feasible points X (green, left) in the decision space is mapped by a vector-valued function f (each coordinate one criterion) into the image space. The result is the yellow set f(X) (right) in the value space. The "lower left-hand" boundary (red) of this yellow set is the image of the solution set of the given multiobjective optimisation problem. These red points are incomparable with each other, and without further knowledge a decision-maker is better served by knowing as much about the solution set as possible. (Note that the decision space, and consequently X, are usually high-dimensional, but f(X) is not.)

(Of course, in such a simple two-dimensional example, one might argue that a decision-maker is only propery interested in points on the "red curve" on the right hand side that are close to the origin and that can, say, be characterized by the curvature of the set under consideration. In two-criteria (bciriteria) optimization this is correct, and this is precisely the reason why two-criteria optimization is no longer an interesting research topic. If more than two criteria is present, the concept of curvature of the set of efficient points can no longer be employed by a rational decision maker. The reader should keep in mind that illustrations serve only illustrative purposes.)

Do we really need to construct an approximation to the full or even a large part of the "red curve"?

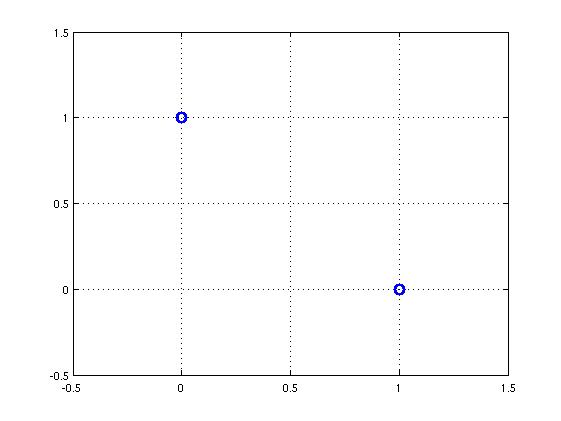

Consider the following (nonlinear, albeit convex) two-criteria example. The decision space X is the euclidean plane, and the two objective functions fi ( i = 1, 2) are given by fi (x) = || x - ai || with a1 = (1, 0) and a2 = (0, 1). (Keen readers will immediately discern the set of efficient points.) Consider now a decision maker that is faced with the following information. He is given the graphic

as well as the following information: "The image of the set of efficient points consists of the two blue points as well as the line connecting them." Now, this information is

(And, of course, a table or database connecting the points displayed in the graphic above with their preimages in the decision space.)

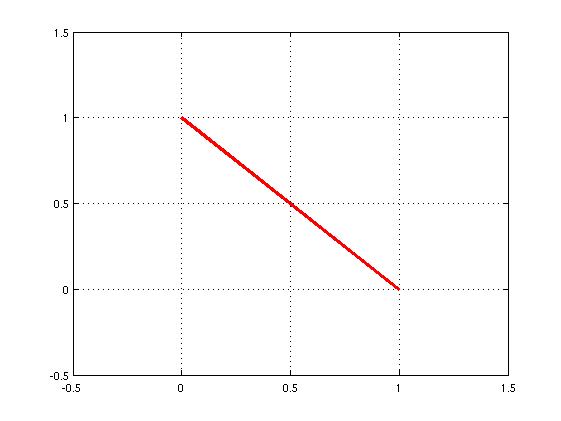

By the way, the actual image of the set of efficient points is

From the discussion above, it is clear that we need indeed to cover the set of efficient points with a cloud of explicitly calculated points. A rough approximation by only a few points and a recourse to interpolation will not do.

Still, the "endpoints" of the red curve are certainly of less importance than points in the "middle" of the image of the set of efficient points, or?

That, of course, depends very much on the problem at hand. First of all, such "endpoints" or "extreme points" correspond to decisions in which only one of the objectives is minimized, to the total neglect of all others. As such, a decision maker might very well want to know at least roughly what could be achieved by following such strategy, if only to get more insight into the problem at hand. But how do you know that you are not interested in these points if you do not know where they are? Deciding that such points should be deleted from further considerations (and possibly also points in a certain neighbourhood around them) can only be done after knowing their precise location in the value space: they have to be computed, albeit possibly with lower accuracy.

Computing one efficient point is, in principle, rather simple: there exists a host of strategies of transforming a multiobjective problem into a family of parametrized (i.e. weighted) single-objective problems. One fixes the weights (the parameters), and one might have hope that standard software might be able to generate one efficient point within reasonable time.

So how does one generate many efficient points efficiently, i.e. quickly?

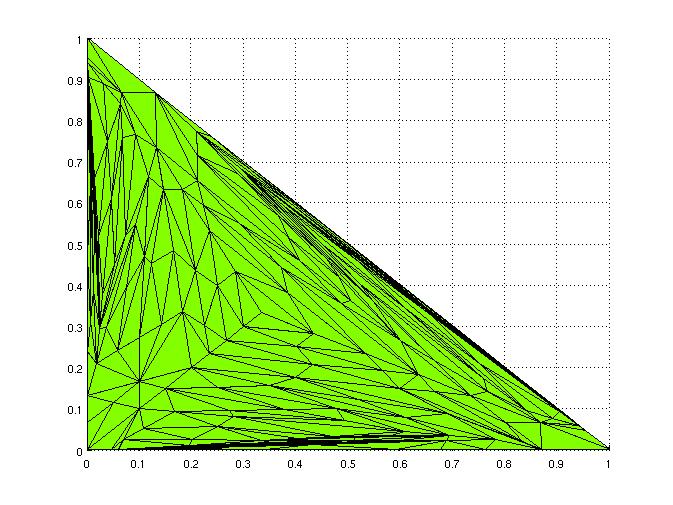

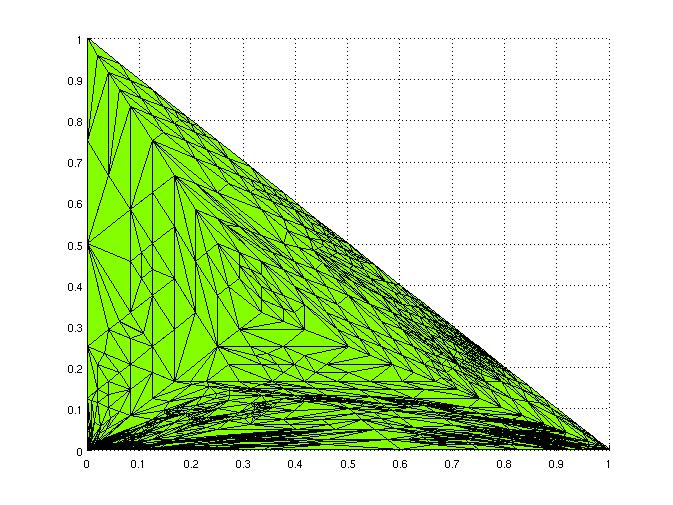

The figure below explains the main idea: one does not solve a given parameterized problem to optimality, but introduces instead new parameters along the way, as soon as the distance between already computed approximative efficient points has grown too large --a sure indication for a low-quality approximation.

It is possible show that, such method, if properly implemented

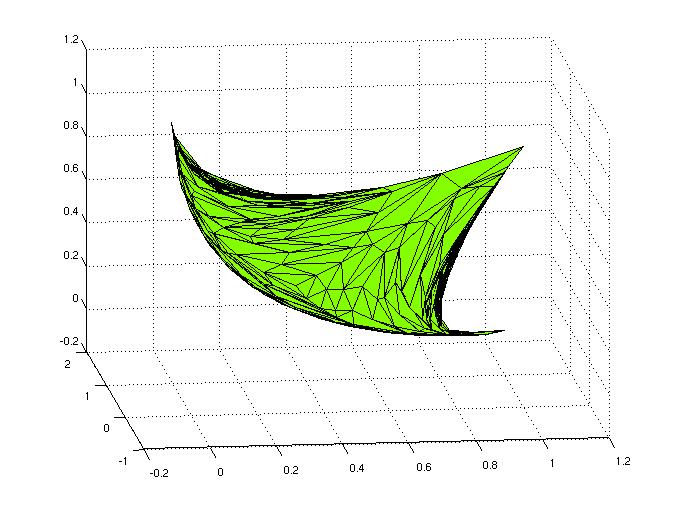

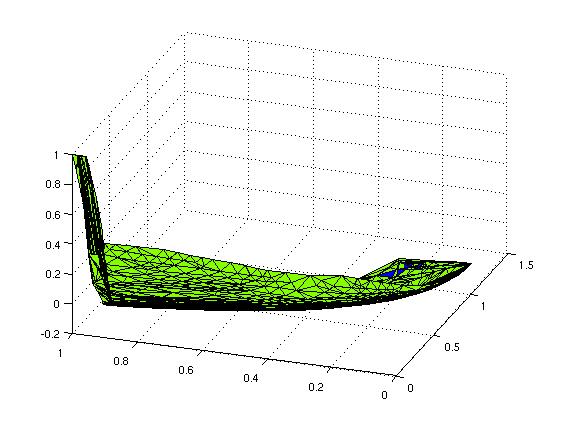

The picture on the left shows the discretization that automatically took place in the parameter (weight) space. (For three-criteria problems, the parameter space is two-dimensional.) The picture on the right show the corresponding image of the set of efficient points, i.e. the set whose approximation we need to construct. Clearly, the approximation is of high enough quality for a decision-maker to identify interesting regions in the value space and proceed with the decision-making process.

Finally, a real-world problem with three criteria. Again, on the left-hand side the discretization in the weight space. It is clear that different areas of the parameter space warrant different discretization qualities. Without an adaptive algorithm as described above, one would heavily waste computation time. On the right-hand side is the corresponding image of the set of efficient points.

Here is the Matlab .fig-file corresponding to the visualization above. (Generated with Matlab 7.2.0.294 (R2006a) on a Linux machine; that means that Matlab installed on a Windows machine will not be able to read the file.) Matlab allows for rotating three-dimensional images around in an interactive fashion, and this feature allows one to investigate the actual shape of the set in three-dimensional space.

Some publications of interest:

Jörg Fliege: "Newton's Method for Multiobjective Optimization".

Submitted. (With L. Mauricio Grana Drummond and Benar F. Svaiter.)

Available at Optimization Online.

Jörg Fliege: "An adaptive primal-dual warm-start technique for quadratic multiobjective optimization". Preprint 2006/34, School of Mathematics, The University of Birmingham, Edgbaston, Birmingham B15 2TT, UK. Submitted. (With Daniel Molz and Christoph Heermann.) Submitted. Available at Optimization Online.

Jörg Fliege: "An Efficient Interior-Point Method for Convex Multicriteria Optimization Problems". Mathematics of Operations Research, Volume 31, Number 4, pp.~825--845, November 2006.

Jörg Fliege: "A Multicriteria Optimization Approach to Bilevel Optimization". Journal of Optimization Theory and Applications, Volume~131, No.~2, pp.~209--225, November 2006. (With Luis N. Vicente)

Jörg Fliege: "A New Adaptive Algorithm for Convex Quadratic Multicriteria Optimization". In Jürgen Branke, Kalyanmoy Deb, Kaisa Miettinen, Ralph E. Steuer (ed.): Practical Approaches to Multi-Objective Optimization, Dagstuhl Seminar Proceedings, Schloß Dagstuhl, Germany, 2005 (with Christoph Heermann and Bernd Weyers).

Jörg Fliege: "A New Adaptive Algorithm for Convex Quadratic Multicriteria Optimization". Ergebnisberichte Angewandte Mathematik No. 252. Fachbereich Mathematik, Universität Dortmund, 44221 Dortmund. April 2004. Submitted. (With Christoph Heermann and Bernd Weyers.) Download a two-page summary or the report in ps, gzipped ps, or pdf-format.

Jörg Fliege: "Gap-Free Computation of Pareto-Points by Quadratic Scalarizations". Mathematical Methods of Operations Research, Vol. 59, Issue~1, pp.~69--89, 2004.

Jörg Fliege: "Generalized Goal Programming: Polynomial Methods and Applications". Mathematical Programming, Vol. 93, Issue~2, pp.~281--303, 2002. (With Emilio Carrizosa) Download abstract.

Jörg Fliege: "Constructing Approximations to the Efficient Set of Convex Quadratic Multiobjective Problems". Ergebnisberichte Angewandte Mathematik. No. 211. Fachbereich Mathematik, Universität Dortmund, 44221 Dortmund, Germany. January 2002. Submitted. (With Andree Heseler) Download abstract or the report in dvi, ps, gzipped ps, or pdf-format.

Jörg Fliege: "Approximation Techniques for the Set of Efficient Points". Habilitationsschrift. Fachbereich Mathematik, Universität Dortmund, 44221 Dortmund, Germany. January 2001. Download dvi-file, gzipped postscript-file, postscript-file, or pdf-file.